Overlays over templating

As Kubernetes grew in popularity, people started looking for effective ways to manage their application manifests, their declarative descriptions of the Kubernetes resources they needed to run their apps on a cluster.

That raises a problem: how can I use the same app manifests to target multiple environments? How can I produce multiple variants?

The most common solution is some kind of templating, usually Go templates via Helm. Helm comes with many other advantages and drawbacks, but we’re going to ignore those today and focus on the problems with templating.

An Alternative API

Once a template is used by enough people, you end up parameterizing every value in it. At that point, you’ve provided an alternative API schema that contains an out-of-date subset of the full API [1].

Here’s a bit of the Datadog Helm chart while you ponder that:

```yaml
{{- template "check-version" . }}
{{- if .Values.agents.enabled }}
{{- if (or (.Values.datadog.apiKeyExistingSecret) (.Values.datadog.apiKey)) }}
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: {{ template "datadog.fullname" . }}
  namespace: {{ .Release.Namespace }}
  labels:
{{ include "datadog.labels" . | indent 4 }}
    app.kubernetes.io/component: agent
    {{- if .Values.agents.additionalLabels }}
{{ toYaml .Values.agents.additionalLabels | indent 4 }}
    {{- end }}
{{ include "provider-labels" . | indent 4 }}
  {{- if .Values.agents.daemonsetAnnotations }}
  annotations: {{ toYaml .Values.agents.daemonsetAnnotations | nindent 4 }}
  {{- end }}
spec:
  revisionHistoryLimit: {{ .Values.agents.revisionHistoryLimit }}
  selector:
    matchLabels:
      app: {{ template "datadog.fullname" . }}
      {{- if .Values.agents.podLabels }}
{{ toYaml .Values.agents.podLabels | indent 6 }}
      {{- end }}
  template:
    metadata:
      labels:
{{ include "datadog.template-labels" . | indent 8 }}
        app.kubernetes.io/component: agent
        admission.datadoghq.com/enabled: "false"
        app: {{ template "datadog.fullname" . }}
        {{- if .Values.agents.podLabels }}
{{ toYaml .Values.agents.podLabels | indent 8 }}
        {{- end }}
        {{- if .Values.agents.additionalLabels }}
{{ toYaml .Values.agents.additionalLabels | indent 8 }}
        {{- end }}
{{ (include "provider-labels" .) | indent 8 }}
      name: {{ template "datadog.fullname" . }}
      annotations:
        checksum/clusteragent_token: {{ include (print $.Template.BasePath "/secret-cluster-agent-token.yaml") . | sha256sum }}
        {{- if not .Values.datadog.apiKeyExistingSecret }}
        checksum/api_key: {{ include (print $.Template.BasePath "/secret-api-key.yaml") . | sha256sum }}
        {{- end }}
        checksum/install_info: {{ printf "%s-%s" .Chart.Name .Chart.Version | sha256sum }}
        checksum/autoconf-config: {{ tpl (toYaml .Values.datadog.autoconf) . | sha256sum }}
        checksum/confd-config: {{ tpl (toYaml .Values.datadog.confd) . | sha256sum }}
        checksum/checksd-config: {{ tpl (toYaml .Values.datadog.checksd) . | sha256sum }}
        {{- if eq (include "should-enable-otel-agent" .) "true" }}
        checksum/otel-config: {{ include "otel-agent-config-configmap-content" . | sha256sum }}
        {{- end }}
        {{- if .Values.agents.customAgentConfig }}
        checksum/agent-config: {{ tpl (toYaml .Values.agents.customAgentConfig) . | sha256sum }}
        {{- end }}
        {{- if eq (include "should-enable-system-probe" .) "true" }}
        {{- if .Values.agents.podSecurity.apparmor.enabled }}
        container.apparmor.security.beta.kubernetes.io/system-probe: {{ .Values.datadog.systemProbe.apparmor }}
        {{- end }}
        {{- if semverCompare "<1.19.0" .Capabilities.KubeVersion.Version }}
        container.seccomp.security.alpha.kubernetes.io/system-probe: {{ .Values.datadog.systemProbe.seccomp }}
        {{- end }}
        {{- end }}
        {{- if and .Values.agents.podSecurity.apparmor.enabled .Values.datadog.sbom.containerImage.uncompressedLayersSupport }}
        container.apparmor.security.beta.kubernetes.io/agent: unconfined
        {{- end }}
        {{- if .Values.agents.podAnnotations }}
{{ tpl (toYaml .Values.agents.podAnnotations) . | indent 8 }}
        {{- end }}
    spec:
      {{- if .Values.agents.shareProcessNamespace }}
      shareProcessNamespace: {{ .Values.agents.shareProcessNamespace }}
      {{- end }}
      {{- if .Values.datadog.securityContext -}}
      {{ include "generate-security-context" (dict "securityContext" .Values.datadog.securityContext "targetSystem" .Values.targetSystem "seccomp" "" "kubeversion" .Capabilities.KubeVersion.Version ) | nindent 6 }}
      {{- else if or .Values.agents.podSecurity.podSecurityPolicy.create .Values.agents.podSecurity.securityContextConstraints.create -}}
      {{- if .Values.agents.podSecurity.securityContext }}
      {{- if .Values.agents.podSecurity.securityContext.seLinuxOptions }}
      securityContext:
        seLinuxOptions:
{{ toYaml .Values.agents.podSecurity.securityContext.seLinuxOptions | indent 10 }}
      {{- end }}
      {{- else if .Values.agents.podSecurity.seLinuxContext }}
      {{- if .Values.agents.podSecurity.seLinuxContext.seLinuxOptions }}
      securityContext:
        seLinuxOptions:
{{ toYaml .Values.agents.podSecurity.seLinuxContext.seLinuxOptions | indent 10 }}
      {{- end }}
      {{- end }}
      {{- else if eq (include "is-openshift" .) "true"}}
      securityContext:
        seLinuxOptions:
          user: "system_u"
          role: "system_r"
          type: "spc_t"
          level: "s0"
      {{- end }}
      {{- if .Values.agents.useHostNetwork }}
      hostNetwork: {{ .Values.agents.useHostNetwork }}
      dnsPolicy: ClusterFirstWithHostNet
      {{- end }}
      {{- if .Values.agents.dnsConfig }}
      dnsConfig:
{{ toYaml .Values.agents.dnsConfig | indent 8 }}
      {{- end }}
      {{- if (eq (include "should-enable-host-pid" .) "true") }}
      hostPID: true
      {{- end }}
      {{- if .Values.agents.image.pullSecrets }}
      imagePullSecrets:
{{ toYaml .Values.agents.image.pullSecrets | indent 8 }}
      {{- end }}
      {{- if or .Values.agents.priorityClassCreate .Values.agents.priorityClassName }}
      priorityClassName: {{ .Values.agents.priorityClassName | default (include "datadog.fullname" . ) }}
      {{- end }}
      containers:
      {{- include "container-agent" . | nindent 6 }}
      {{- if eq (include "should-enable-trace-agent" .) "true" }}
      {{- include "container-trace-agent" . | nindent 6 }}
      {{- end }}
      {{- if eq (include "should-enable-fips" .) "true" }}
      {{- include "fips-proxy" . | nindent 6 }}
      {{- end }}
      {{- if eq (include "should-enable-process-agent" .) "true" }}
      {{- include "container-process-agent" . | nindent 6 }}
      {{- end }}
      {{- if eq (include "should-enable-system-probe" .) "true" }}
      {{- include "container-system-probe" . | nindent 6 }}
      {{- end }}
      {{- if eq (include "should-enable-security-agent" .) "true" }}
      {{- include "container-security-agent" . | nindent 6 }}
      {{- end }}
      {{- if eq (include "should-enable-otel-agent" .) "true" }}
      {{- include "container-otel-agent" . | nindent 6 }}
      {{- end }}
      initContainers:
      {{- if eq .Values.targetSystem "windows" }}
      {{ include "containers-init-windows" . | nindent 6 }}
      {{- end }}
      {{- if eq .Values.targetSystem "linux" }}
      {{ include "containers-init-linux" . | nindent 6 }}
      {{- end }}
      {{- if and (eq (include "should-enable-system-probe" .) "true") (eq .Values.datadog.systemProbe.seccomp "localhost/system-probe") }}
      {{ include "system-probe-init" . | nindent 6 }}
      {{- end }}
      volumes:
      {{- if (not .Values.providers.gke.autopilot) }}
      - name: auth-token
        emptyDir: {}
      {{- end }}
      - name: installinfo
        configMap:
          name: {{ include "agents-install-info-configmap-name" . }}
      - name: config
        emptyDir: {}
      {{- if .Values.datadog.checksd }}
      - name: checksd
        configMap:
          name: {{ include "datadog-checksd-configmap-name" . }}
      {{- end }}
      {{- if .Values.agents.useConfigMap }}
      - name: datadog-yaml
        configMap:
          name: {{ include "agents-useConfigMap-configmap-name" . }}
      {{- end }}
      {{- if eq .Values.targetSystem "windows" }}
      {{ include "daemonset-volumes-windows" . | nindent 6 }}
      {{- end }}
      {{- if eq .Values.targetSystem "linux" }}
      {{ include "daemonset-volumes-linux" . | nindent 6 }}
      {{- end }}
      {{- if eq (include "should-enable-otel-agent" .) "true" }}
      - name: otelconfig
        configMap:
          name: {{ include "agents-install-otel-configmap-name" . }}
          items:
          - key: otel-config.yaml
            path: otel-config.yaml
      {{- end }}
{{- if .Values.agents.volumes }}
{{ toYaml .Values.agents.volumes | indent 6 }}
{{- end }}
      tolerations:
      {{- if eq .Values.targetSystem "windows" }}
      - effect: NoSchedule
        key: node.kubernetes.io/os
        value: windows
        operator: Equal
      {{- end }}
      {{- if .Values.agents.tolerations }}
{{ toYaml .Values.agents.tolerations | indent 6 }}
      {{- end }}
      affinity:
{{ toYaml .Values.agents.affinity | indent 8 }}
      serviceAccountName: {{ include "agents.serviceAccountName" . | quote }}
      {{- if .Values.agents.rbac.create }}
      automountServiceAccountToken: {{.Values.agents.rbac.automountServiceAccountToken }}
      {{- end }}
      nodeSelector:
        {{ template "label.os" . }}: {{ .Values.targetSystem }}
      {{- if .Values.agents.nodeSelector }}
{{ toYaml .Values.agents.nodeSelector | indent 8 }}
      {{- end }}
  updateStrategy:
{{ toYaml .Values.agents.updateStrategy | indent 4 }}
{{ end }}
{{ end }}
```

To be fair, the above is not the API provided by the Helm chart (that would be `values.yaml`), but on every Helm chart I’ve worked on, I’ve had to dive into the templates. And a template plus its values is difficult to reason about, more difficult than the manifests themselves.
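To see why every new need turns into a new parameter, here’s a deliberately tiny Python sketch that uses the standard library’s `string.Template` as a stand-in for Go templates (an assumption for illustration; Helm’s template engine is far richer). The placeholders are the "chart’s" entire API: a field the author didn’t parameterize can’t be set without editing the template, and a value with no matching placeholder simply has no effect, much like a key in `values.yaml` that no template reads. `DEPLOYMENT_TEMPLATE`, `template_api`, and `render` are hypothetical names.

```python
from string import Template

# A hypothetical, minimal "chart": only name, replicas and image are
# parameterized. Every other Deployment field is out of reach.
DEPLOYMENT_TEMPLATE = Template("""\
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ${name}
spec:
  replicas: ${replicas}
  template:
    spec:
      containers:
      - name: ${name}
        image: ${image}
""")


def template_api(template: Template) -> set[str]:
    """Return the placeholder names: the template's de facto API."""
    names = set()
    for match in template.pattern.finditer(template.template):
        name = match.group("named") or match.group("braced")
        if name:  # skip escaped "$$" matches
            names.add(name)
    return names


def render(values: dict) -> str:
    # substitute() raises KeyError if a placeholder has no value,
    # but silently ignores keys that have no placeholder.
    return DEPLOYMENT_TEMPLATE.substitute(values)


if __name__ == "__main__":
    values = {
        "name": "monopole",
        "replicas": 3,
        "image": "monopole/hello:1",
        # Valid Kubernetes, but not part of this template's API:
        # it never reaches the rendered manifest.
        "tolerations": [{"key": "dedicated", "operator": "Exists"}],
    }
    print(sorted(template_api(DEPLOYMENT_TEMPLATE)))  # ['image', 'name', 'replicas']
    print(render(values))
```

Supporting `tolerations` here means editing the template and adding a new parameter; repeat that for every user’s request and you arrive at the Datadog chart.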

Tooling

Bad tooling is a corollary of providing an alternative API.

Most tooling is built against the original Kubernetes API, so the community has to build plugins to bridge the gap (e.g. helm-kubeval).

I think the community has actually done a very good job of this, and I would credit that to Helm’s usefulness as a package manager. But why is it both a templating tool and a package manager? And do we need a package manager to deploy our bespoke (in-house) applications?

Overlays

Overlays are a way to accomplish our original goal, producing variants, without templating.

You have a base, an overlay, and the variant. The base and the variant are written in the same language, and the overlay operation should be crystal clear. This is probably easier to understand through an example.

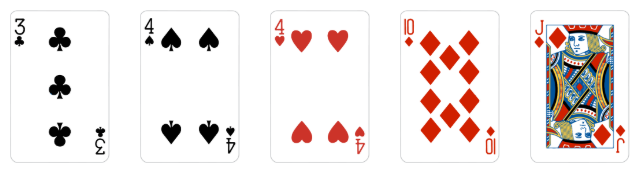

Imagine a card game played in rounds. You start with this hand (the base).

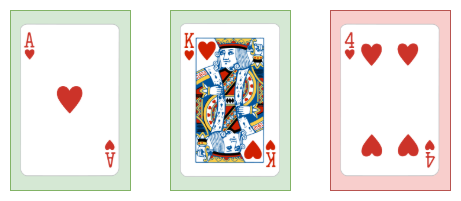

In one round, you experience the following change (the overlay):

Your resulting hand would be this:

Both the initial hand and the resulting hand (the base and the variant) are clear to anybody who knows the cards. At no point is there a poorly maintained template.

This idea, that the input and the variant are in the same language, is the core of declarative application management (DAM). As the white paper on this topic states, the ideal tool should be able to instantiate multiple variants while exposing and teaching the Kubernetes APIs [1]. Templating does allow for variants, but it obfuscates the APIs.
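In Kubernetes terms, the base and the variant are both manifests, and the overlay is a partial manifest in the same shape. One well-defined overlay operation is JSON Merge Patch (RFC 7386): nested objects merge key by key, `null` deletes a key, and any other value replaces what the base had. Kustomize’s strategic merge patches go further (for example, merging lists of containers by name instead of replacing them), so treat this Python sketch as an illustration of the idea, not of Kustomize’s exact behaviour. `merge_patch` is a hypothetical helper.

```python
import copy
from typing import Any


def merge_patch(base: Any, overlay: Any) -> Any:
    """Apply `overlay` to `base` following JSON Merge Patch (RFC 7386).

    Neither argument is mutated; the merged document (the variant) is returned.
    """
    if not isinstance(overlay, dict):
        # Scalars and lists replace the base value wholesale.
        return copy.deepcopy(overlay)
    result = copy.deepcopy(base) if isinstance(base, dict) else {}
    for key, value in overlay.items():
        if value is None:
            result.pop(key, None)  # null means "delete this key"
        else:
            result[key] = merge_patch(result.get(key), value)
    return result


if __name__ == "__main__":
    # The monopole-map ConfigMap from the Kustomize example (the base).
    base = {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": "monopole-map", "labels": {"app": "hello"}},
        "data": {"altGreeting": "Good Morning!", "enableRisky": "false"},
    }
    # The overlay is written in the same language as the base.
    overlay = {"data": {"enableRisky": "true"}}
    variant = merge_patch(base, overlay)
    print(variant["data"])  # {'altGreeting': 'Good Morning!', 'enableRisky': 'true'}
```

Base, overlay, and variant are all plain manifests; nothing in the process needs a template language.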

Kustomize as an implementation of DAM

The tool built from the principles in the Declarative App Management white paper is Kustomize. In Kustomize, you define a base: a set of Kubernetes resources. You then apply one or more overlays to customize the base in the ways you’d typically want to in Kubernetes, such as adding resources, labels, or annotations. The result is a variant, usually targeted at a specific environment.

The key thing that makes this a great tool is that the input is crystal clear (it’s just normal Kubernetes manifests). Overlays are similarly easy to understand. The whole process is well-scoped and easy to follow: the tool does no more than it should.

Example

Here’s my own adapted version of Kustomize’s hello world, which I put together after experimenting with it for a while. It’s a really fun tool to play around with.

Prerequisites

Install kustomize and kubectl. Optionally, you can also run a Kubernetes cluster to deploy to.

```shell
$ brew install kustomize
$ brew install kubectl
```

```shell
$ kubectl config current-context
gke_atomic-commits_us-central1-c_my-first-cluster-1
```

Clone the repo

```shell
$ git clone https://github.com/alexhwoods/kustomize-example.git
...
```

```shell
$ kustomize build base
```

You should see the full base kustomization, rendered. A kustomization is a `kustomization.yaml` file, or a directory containing one. You can think of it as a general set of Kubernetes resources with some extra metadata.

```yaml
apiVersion: v1
data:
  altGreeting: Good Morning!
  enableRisky: "false"
kind: ConfigMap
metadata:
  labels:
    app: hello
  name: monopole-map
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: hello
  name: monopole
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 8080
  selector:
    app: hello
    deployment: hello
  type: LoadBalancer
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: hello
  name: monopole
spec:
  replicas: 3
  selector:
    matchLabels:
      app: hello
      deployment: hello
  template:
    metadata:
      labels:
        app: hello
        deployment: hello
    spec:
      containers:
      - command:
        - /hello
        - --port=8080
        - --enableRiskyFeature=$(ENABLE_RISKY)
        env:
        - name: ALT_GREETING
          valueFrom:
            configMapKeyRef:
              key: altGreeting
              name: monopole-map
        - name: ENABLE_RISKY
          valueFrom:
            configMapKeyRef:
              key: enableRisky
              name: monopole-map
        image: monopole/hello:1
        name: monopole
        ports:
        - containerPort: 8080
```

We have a ConfigMap, a Service of type LoadBalancer, and a Deployment. Let’s deploy just the base and see what we get.

```shell
# create a namespace to mess around in
$ kubectl create ns kustomize-example-only-the-base

# apply all resources in the kustomization to the cluster
$ kustomize build base | kubectl apply -n kustomize-example-only-the-base -f -
configmap/monopole-map created
service/monopole created
deployment.apps/monopole created

# is everything there?
$ kubectl get all -n kustomize-example-only-the-base
NAME                            READY   STATUS    RESTARTS   AGE
pod/monopole-647458c669-hhkhr   1/1     Running   0          11m
pod/monopole-647458c669-k6d4c   1/1     Running   0          11m
pod/monopole-647458c669-zmcxg   1/1     Running   0          11m

NAME               TYPE           CLUSTER-IP     EXTERNAL-IP     PORT(S)        AGE
service/monopole   LoadBalancer   10.105.8.138   34.72.203.252   80:32142/TCP   11m

NAME                       READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/monopole   3/3     3            3           11m

NAME                                  DESIRED   CURRENT   READY   AGE
replicaset.apps/monopole-647458c669   3         3         3       11m
```

Now if I go to the load balancer’s external IP, I see a simple website.

Overlays

Now let’s add a very simple overlay. An overlay (in Kustomize) is a kustomization that depends on another kustomization.
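Concretely, "depends on" means the overlay lists the base’s directory under `resources`, using a path relative to itself. Here’s a minimal Python sketch of how that chain resolves, assuming each `kustomization.yaml` has already been parsed into a dict. `KUSTOMIZATIONS` and `build_order` are hypothetical names, not Kustomize’s API, and the base’s resource list is inferred from the repo layout. The base is built first, then each overlay is applied on top of it.

```python
import posixpath

# Hypothetical stand-in for parsed kustomization.yaml files, keyed by
# directory. Real Kustomize reads these from disk.
KUSTOMIZATIONS = {
    "base": {
        "resources": ["configMap.yaml", "deployment.yaml", "service.yaml"],
    },
    "overlays/dev": {
        "resources": ["../../base"],
        "commonLabels": {"variant": "dev"},
    },
}


def build_order(directory: str, visiting: frozenset = frozenset()) -> list[str]:
    """Return kustomization directories in build order, bases first."""
    if directory in visiting:
        raise ValueError(f"cycle through {directory}")
    order = []
    for entry in KUSTOMIZATIONS[directory].get("resources", []):
        # Resource paths are relative to the kustomization's own directory.
        path = posixpath.normpath(posixpath.join(directory, entry))
        if path in KUSTOMIZATIONS:  # another kustomization: build it first
            order += build_order(path, visiting | {directory})
        # Otherwise it is a plain manifest file, loaded as-is.
    order.append(directory)
    return order


if __name__ == "__main__":
    print(build_order("overlays/dev"))  # ['base', 'overlays/dev']
```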

The repo is laid out like this:

```
.
├── base
│   ├── configMap.yaml
│   ├── deployment.yaml
│   ├── kustomization.yaml
│   └── service.yaml
└── overlays
    └── dev
        └── kustomization.yaml
```

Here is `overlays/dev/kustomization.yaml`:

```yaml
resources:
  - ../../base
commonLabels:
  variant: dev
```

Let’s look at the diff between the base and the overlay:

```shell
$ diff <(kustomize build base) <(kustomize build overlays/dev)
8a9
>     variant: dev
15a17
>     variant: dev
24a27
>     variant: dev
31a35
>     variant: dev
38a43
>       variant: dev
43a49
>         variant: dev
```

That’s it: we just added a `variant: dev` label to every resource, as well as to the Service and Deployment selectors and the pod template. Now let’s use an overlay to actually make a change to our app. We’ll build another overlay, for the staging environment. Here’s its `kustomization.yaml`:

```yaml
namePrefix: staging-
commonLabels:
  variant: staging
resources:
  - ../../base
patchesStrategicMerge:
  - map.yaml
```

And `map.yaml`, the ConfigMap that we’re merging with the one in the base:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: monopole-map
data:
  altGreeting: "Now we're in staging 😏"
  enableRisky: "true" # italics! really risky!
```

We can see the effects on our website.
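Under the hood, this overlay is a handful of transformations over the base resources, and two of them are worth sketching. `commonLabels` doesn’t stop at `metadata.labels`: it also adds the label to the Service selector and to the Deployment’s selector and pod template, so the selectors keep matching the pods. That’s why the dev diff above had six additions for three resources (1 + 2 + 3). `namePrefix` renames every resource, so the Deployment’s `configMapKeyRef` entries must be renamed too, or the pods would look for a ConfigMap that no longer exists. Here’s a simplified Python sketch of both, assuming manifests are plain dicts; `LABEL_FIELDS`, `apply_common_labels`, and `apply_name_prefix` are hypothetical names, and Kustomize’s real transformers cover many more kinds and reference fields.

```python
import copy

# Simplified field specs: (kind, or None for every kind; path to a label map).
# Kustomize's real list covers many more kinds and fields.
LABEL_FIELDS = [
    (None, ("metadata", "labels")),
    ("Service", ("spec", "selector")),
    ("Deployment", ("spec", "selector", "matchLabels")),
    ("Deployment", ("spec", "template", "metadata", "labels")),
]


def apply_common_labels(resources: list[dict], labels: dict) -> list[dict]:
    """Return copies of `resources` with `labels` merged into every label field."""
    out = copy.deepcopy(resources)
    for resource in out:
        for kind, path in LABEL_FIELDS:
            if kind is not None and resource["kind"] != kind:
                continue
            node = resource
            for key in path:  # create missing intermediate maps
                node = node.setdefault(key, {})
            node.update(labels)
    return out


def apply_name_prefix(resources: list[dict], prefix: str) -> list[dict]:
    """Prefix every resource name, then fix up configMapKeyRef references."""
    out = copy.deepcopy(resources)
    renamed = {}  # old ConfigMap name -> new ConfigMap name
    for resource in out:
        old = resource["metadata"]["name"]
        resource["metadata"]["name"] = prefix + old
        if resource["kind"] == "ConfigMap":
            renamed[old] = prefix + old
    for resource in out:
        if resource["kind"] != "Deployment":
            continue
        for container in resource["spec"]["template"]["spec"]["containers"]:
            for env in container.get("env", []):
                ref = env.get("valueFrom", {}).get("configMapKeyRef")
                if ref is not None and ref["name"] in renamed:
                    ref["name"] = renamed[ref["name"]]
    return out


if __name__ == "__main__":
    # A trimmed-down copy of the base from the example repo.
    env = [{"name": "ENABLE_RISKY", "valueFrom": {
        "configMapKeyRef": {"key": "enableRisky", "name": "monopole-map"}}}]
    base = [
        {"kind": "ConfigMap", "metadata": {"name": "monopole-map"}},
        {"kind": "Service", "metadata": {"name": "monopole"},
         "spec": {"selector": {"app": "hello"}}},
        {"kind": "Deployment", "metadata": {"name": "monopole"}, "spec": {
            "selector": {"matchLabels": {"app": "hello"}},
            "template": {"metadata": {"labels": {"app": "hello"}},
                         "spec": {"containers": [{"name": "monopole", "env": env}]}},
        }},
    ]
    staging = apply_name_prefix(
        apply_common_labels(base, {"variant": "staging"}), "staging-")
    print([r["metadata"]["name"] for r in staging])
    # ['staging-monopole-map', 'staging-monopole', 'staging-monopole']
```

The point isn’t the code itself; it’s that each transformation is small, well-scoped, and produces ordinary manifests you can read.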

What else can we do with a kustomization?

Here’s a non-exhaustive list:

- Target multiple resource sources to form the base

- Add common labels or annotations

- Use CRDs

- Modify the image or tag of a resource

- Prepend or append values to the names of all resources and references

- Add namespaces to all resources

- Patch resources

- Change the number of replicas for a resource

- Generate Secrets and ConfigMaps, and control the behaviour of ConfigMap and Secret generators

- Substitute name references (e.g. an environment variable)
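The generator behaviour deserves a closer look. By default, Kustomize’s ConfigMap and Secret generators append a hash of the generated content to the resource’s name and update references to it; `generatorOptions.disableNameSuffixHash` turns that off. The payoff is rollouts: new data means a new name in the pod template, so the Deployment rolls out new pods, which is the same job the `checksum/...` annotations do in the Datadog chart. The Python sketch below imitates only the idea; `generated_name` is a hypothetical helper, and Kustomize’s real hash function and encoding differ.

```python
import hashlib
import json


def generated_name(name: str, data: dict[str, str],
                   disable_suffix: bool = False) -> str:
    """Return a ConfigMap name with a short content hash appended.

    `disable_suffix` mirrors the spirit of generatorOptions.disableNameSuffixHash.
    Illustrative only: Kustomize's real hash and encoding are different.
    """
    if disable_suffix:
        return name
    # sort_keys makes the hash independent of key order.
    payload = json.dumps(data, sort_keys=True).encode("utf-8")
    return f"{name}-{hashlib.sha256(payload).hexdigest()[:10]}"


if __name__ == "__main__":
    base = {"altGreeting": "Good Morning!", "enableRisky": "false"}
    staging = {"altGreeting": "Good Morning!", "enableRisky": "true"}
    # Different data yields a different name, so any pod template that
    # references it changes too, and the Deployment rolls out new pods.
    print(generated_name("monopole-map", base))
    print(generated_name("monopole-map", staging))
    print(generated_name("monopole-map", base, disable_suffix=True))  # monopole-map
```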

The more I think about this, the more obvious and appealing the idea becomes. Still, I’m sure I have many more experiences like this one ahead of me:

```yaml
  template:
    metadata:
      labels:
        app: {{ template "datadog.fullname" . }}-cluster-agent
        {{- if .Values.clusterAgent.podLabels }}
{{ toYaml .Values.clusterAgent.podLabels | indent 8 }}
        {{- end }}
      name: {{ template "datadog.fullname" . }}-cluster-agent
      annotations:
        {{- if .Values.clusterAgent.datadog_cluster_yaml }}
        checksum/clusteragent-config: {{ tpl (toYaml .Values.clusterAgent.datadog_cluster_yaml) . | sha256sum }}
        {{- end }}
        {{- if .Values.clusterAgent.confd }}
        checksum/confd-config: {{ tpl (toYaml .Values.clusterAgent.confd) . | sha256sum }}
        {{- end }}
        ad.datadoghq.com/cluster-agent.check_names: '["prometheus"]'
        ad.datadoghq.com/cluster-agent.init_configs: '[{}]'
        ad.datadoghq.com/cluster-agent.instances: |
          [{
            "prometheus_url": "http://%%host%%:5000/metrics",
            "namespace": "datadog.cluster_agent",
            "metrics": [
              "go_goroutines", "go_memstats_*", "process_*",
              "api_requests",
              "datadog_requests", "external_metrics", "rate_limit_queries_*",
              "cluster_checks_*"
            ]
          }]
        {{- if .Values.clusterAgent.podAnnotations }}
{{ toYaml .Values.clusterAgent.podAnnotations | indent 8 }}
        {{- end }}
```

Sources

- [1] Declarative App Management (white paper)

- Kustomize docs
